Remember that scene in 2001: A Space Odyssey where HAL won’t let Dave back onto the ship? In the unlikely event that you’re drawing a blank, it went like this:

Dave Bowman: Hello, HAL. Do you read me, HAL?

HAL: Affirmative, Dave. I read you.

Dave Bowman: Open the pod bay doors, HAL.

HAL: I’m sorry, Dave. I’m afraid I can’t do that.

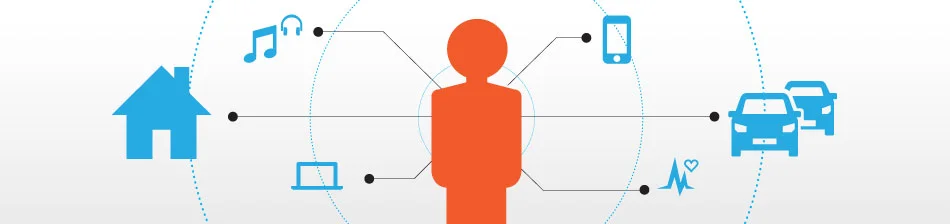

Now, if this scene were being written today, Dave wouldn’t even have needed to ask HAL to open the pod bay doors, because the doors themselves would have sensed his presence, anticipated his intention to enter the ship, and opened on their own. In the age of the Internet of Things, our doors, lights, windows, cars, and wearables can all be taught to anticipate our needs and react appropriately. Instead of interfacing with a central hub like HAL, the user will increasingly find herself at the center of an invisible system of integrated, networked devices designed to predict and meet her needs.

Google Now, which you access from your smartphone or tablet, is a good example of the kind of anticipatory computing available to users today. First, you give Google access to your Internet data: email, contacts, location, browsing history, calendar, social media, and so on. Google Now then uses this data to provide you with “Just the right information at just the right time”: hyperlocal news, weather reports, reminders, sports updates, and traffic reports, each presented at the moment you need it, without you having to ask.

SmartThings is a company that promises “a safer smarter home in the palm of your hand”. The SmartThings app can monitor the security of your home by notifying you about who arrives and leaves, or by letting you know if you’ve left your garage door unlocked. It’ll turn on your heat or AC at just the right time, and even brew your coffee before you wake up. SmartThings’ vision is to bring the Internet of Things to homes everywhere and make it the new standard.

How does all this work?

Sensors coupled with data mining make anticipatory computing possible. Smartphones come out of the box loaded with sensors: an accelerometer, a gyroscope, an ambient light sensor, a proximity sensor, a magnetometer and, on some models, a heart rate sensor. The Samsung Galaxy S5 boasts no fewer than ten sensors. By mining data about your location, your interests, and the members of your social networks, your phone gains situational awareness. This kind of sensor fusion has incredible potential. It could let your smartphone alert you to which guy at the party you should approach to discuss a potential deal. Add data on your emotional state, drawn from heart rate, blood pressure, and sweat sensors (many of which live in wearables rather than in the phone itself), and your phone could even tell you the optimal time of the night to approach him. Maybe, by triangulating all this data, your phone could even help you find your future husband or wife!

Anticipatory computing is not about staring at a screen. The technology is embedded in your networked devices, and those devices are seamlessly integrated into your environment; in other words, into your stuff. Here’s where we make the jump from sci-fi to real life. Our possessions are becoming able to sense our needs and respond to them, which means they function as extensions of ourselves, and we all become networked organisms.

So, what does that mean for UX design? If we’re moving away from the GUI and toward invisible technology, are we staring down the end of UX? Nah, of course not. UX will always be a part of technology, and we think it’s just starting to get interesting. More and more, UX is going to be about responsive design and other methods of designing for multiple platforms. Our day-to-day experience with screens is certainly evolving. As the Internet of Things wires more and more of our home and office devices to the cloud and automates them to suit our movements, certain interactions with a GUI will become less frequent. However, this doesn’t mean that top-notch UX is no longer relevant. On the contrary, as more and more devices are tethered to the cloud, the need for clean and intuitive UX becomes paramount. If the idea is for all our devices and appliances to be part of a unified system, configuration screens and the like will need to adopt some sort of universal look and feel. Cross-platform compatibility has never faced such a challenge, and we think this is very exciting.

All our devices will be mapped onto data that lives online, which will make our physical possessions programmable, adaptive, and customizable. This is great news for those who relate to and rely on the cloud. For those who feel disconcerted by all this connectivity (dare we say surveillance?), it’s certainly a step in the direction of Big Brother.

We wonder what HAL would think of these new and exciting computing developments. How far are we from true artificial intelligence? In closing, here’s the rest of HAL’s conversation with Dave. Food for thought.

Dave Bowman: What’s the problem?

HAL: I think you know what the problem is just as well as I do.

Dave Bowman: What are you talking about, HAL?

HAL: This mission is too important for me to allow you to jeopardize it.

Dave Bowman: I don’t know what you’re talking about, HAL.

HAL: I know that you and Frank were planning to disconnect me, and I’m afraid that’s something I cannot allow to happen.

We’re really excited about the new developments in anticipatory computing. If you’d like to chat with us more about how anticipatory computing can work for you, contact us.